It would be redundant to mention that the N.C. State men’s basketball team is ranked No. 19 in The Associated Press’ top-25 poll. We all have memorized and boasted about our school’s stellar sports ranking, but you would be humbled to learn that our university is only 106th on the 2013 U.S. News & World Report list of best colleges in the nation. To put this in perspective, Duke is ranked eighth and UNC-Chapel Hill 30th. Before you withdraw yourself from this pathetic, not-even-top-100 university and begin dressing yourself in any shade of blue, let us analyze the meaning and purpose of these rankings.

Many of the statistics that define a university relate purely to the high school students it admits. For example, the N.C. State admissions website features tables showing the GPA, class rank and SAT scores of accepted students. Though many people use these figures to judge a university’s difficulty, they say nothing about how students perform once they are in college. Success in high school does not necessarily equate to success in college. As the saying goes (one Technician doesn’t endorse), “Cs get degrees, and a doctor who graduates last in his class is still called ‘doctor.’”

Fewer than half of those who applied to N.C. State in 2012 were admitted. Selectivity is a source of pride for students who are accepted, but it does not always paint an accurate picture of a university’s merit. Mark Gordon, president of the aptly named Defiance College in Ohio, suggested, “If a school wants to move up in the rankings by appearing more selective, they simply decrease the acceptance rate by encouraging more applications from precisely those students they know they will not admit.”

In other words, a statistic such as selectivity can be manufactured.

George Leef of Carolina Journal Online wrote, “In preparing its rankings, U.S. News relies on six factors. Four of those factors are input measurements: financial resources, alumni giving, faculty resources and student quality. One is an output measurement (student retention and graduation rates) and one is a subjective guess (academic reputation). Not one of the factors purports to measure the thing that academic quality is centrally about, namely learning.”

Why would U.S. News include a subjective measure such as academic reputation in its rankings at all?

Leef also contributed to the Pope Center for Higher Education Policy’s 2004 report, titled “Do College Rankings Mean Anything? Why rankings by U.S. News and others are deeply flawed.” He and co-author Michael Lowrey concluded, “More highly ranked colleges and universities do not necessarily offer a better academic experience than do schools with lower rankings.” Therefore, parents and students should not be fooled by what Leef and Lowrey call “facile and formulaic rankings.”

Furthermore, successful people will be successful regardless of where they go to college, or even whether they go at all. Bill Gates and Mark Zuckerberg both dropped out of Harvard, so a Harvard degree was not essential to their billion-dollar successes. In this way, the name of the university should not matter as much as the diligence and determination of the individual student.

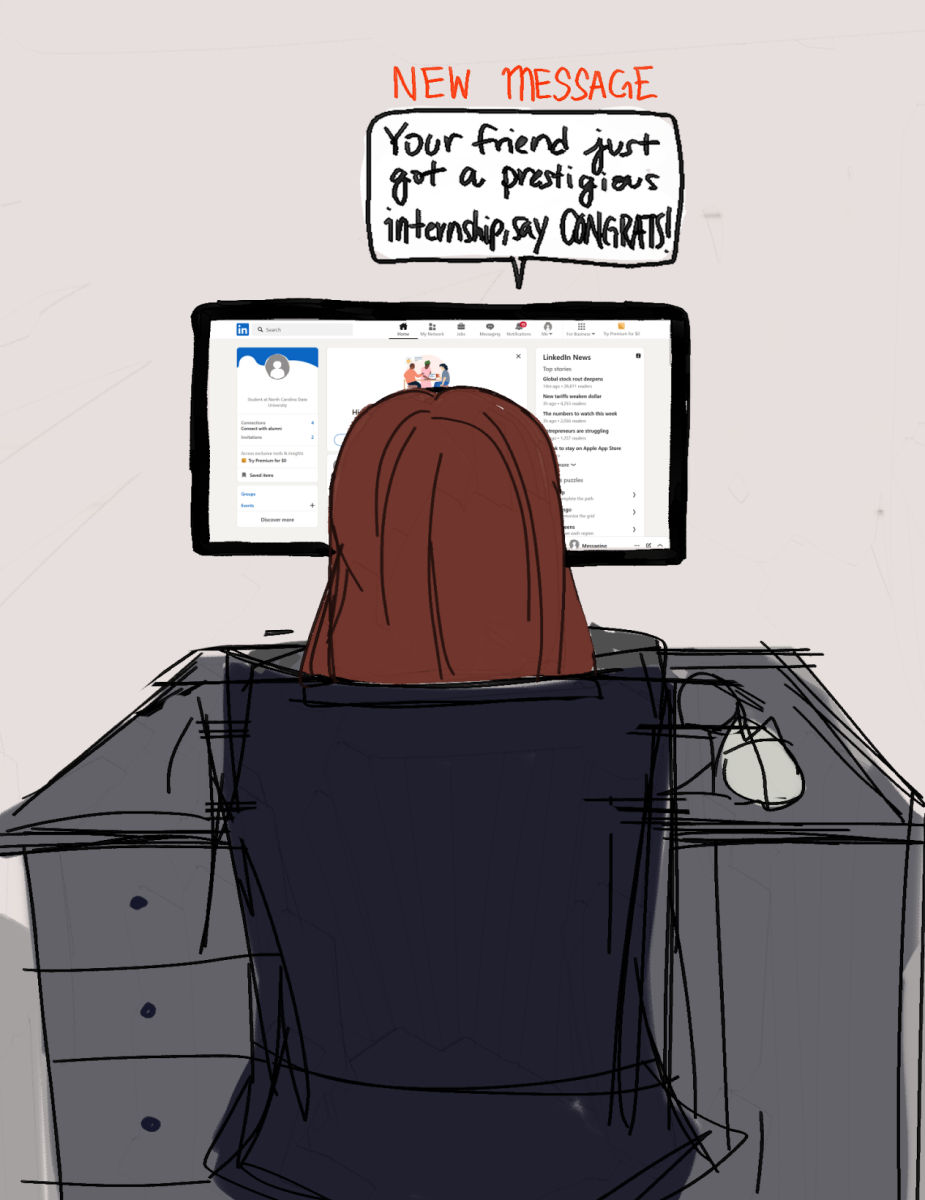

On the other hand, the prestige attached to a university’s name is an important factor in job placement. Consequently, universities walk a fine line. Rankings matter when it comes to recruiting students but, in many ways, say nothing about the quality of education the university provides.

If we must look at rankings, let us be defined not by the students we accept, but by the success of the students we graduate. In the end, college isn’t about how you enter but how you come out.