As generative AI, a form of artificial intelligence that produces human-like responses, becomes more accessible in the classroom through a growing range of platforms and tools, professors are expressing a variety of concerns as they adapt to its presence.

Kathryn Stolee, associate professor in the Department of Computer Science, said generative AI draws on existing data to develop its responses.

“It’s effectively large models of code that have been trained on large amounts of data that’s in the public domain, which is to say they’ve built models of the language that people have used based on what is available and out there,” Stolee said. “And so what it’s able to do is recall connections between things. So if you ask it a question, it can go back and generate, using multiple data sources, things that are common to produce to you.”

Generative AI’s role in academia has been contested, with critics arguing it enables plagiarism. However, many NC State professors have embraced it as a learning tool.

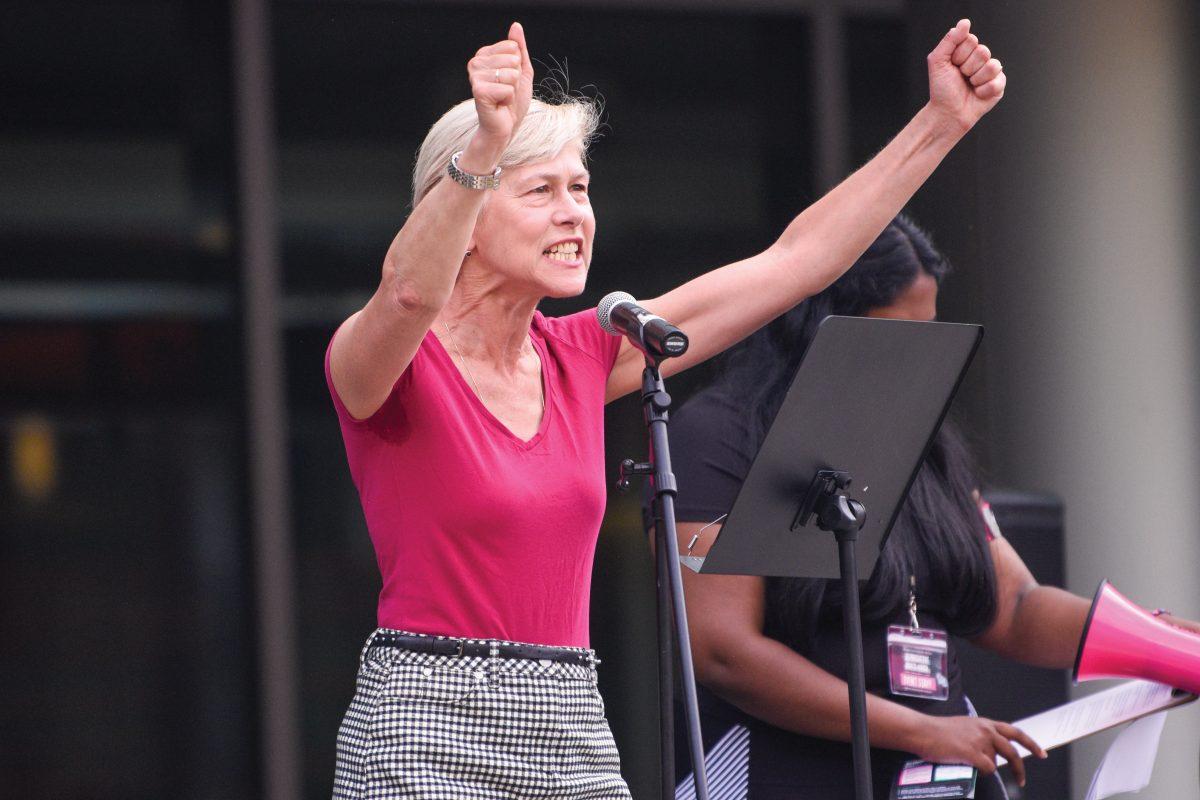

Paul Fyfe, an associate professor in the Department of English, integrates generative AI into his curriculum, using it to show students the challenges and limitations of the software.

“I’ve asked students to go ahead and use it to cheat on their final papers and then to follow up with writing reflections about well, did it even work?” Fyfe said. “What was it like to work with this as a writing partner? Do you feel like the content was your own? To what degree is this your writing? And this exercise where students engage with it actually reveals better than I could the limitations and problems of working with AI, as well as, for some, the real opportunities of how it might help them in particular ways.”

Stolee said that in computer science, AI can benefit students by helping them learn new programming languages. However, she also points out its shortcomings as a learning tool.

“I have the students work out a kind of thought experiment and put their own thoughts into it, and then ask ChatGPT what it would do instead,” Stolee said. “Then you can see how what you said is relative to your context, and how much ChatGPT said might not be right, as a way to highlight the limitations.”

Stolee said those shortcomings include producing images, information or citations that do not exist, errors she called hallucinations and likened to a form of misinformation. Hallucinations can be misleading because the answers often sound authoritative.

David Rieder, a professor in the Department of English, predicted that generative AI will solidify its place in the classroom despite pushback from professors.

“I’m reminded of a couple of years ago, there was a historical parallel being made between the introduction of calculators in math classes and these large language models in, let’s say, an English class,” Rieder said. “There were protests and math teachers picketing. And obviously, calculators have a place in a math curriculum. I think likewise, large language models are basically our calculators, and so we’re going to have to find a way to make them fit.”

Fyfe said students and instructors alike will need AI literacy to use AI effectively as a classroom tool.

“So all of this leads to a broader mission, if not even a crisis, in establishing what people have called AI literacy for students as well as for faculty and instructors about the potentials and the pitfalls of these technologies,” Fyfe said. “As well as the appropriate kind of guidelines and norms for using them.”

Rieder said he aims to teach students how to critically engage with AI in his classroom.

“You can ask it to give you a basic recipe or write a simple poem or something, but to do quality research, specialized work, it takes a lot of work to write the right kinds of prompts, to learn a variety of strategies to engage with these machines,” Rieder said.