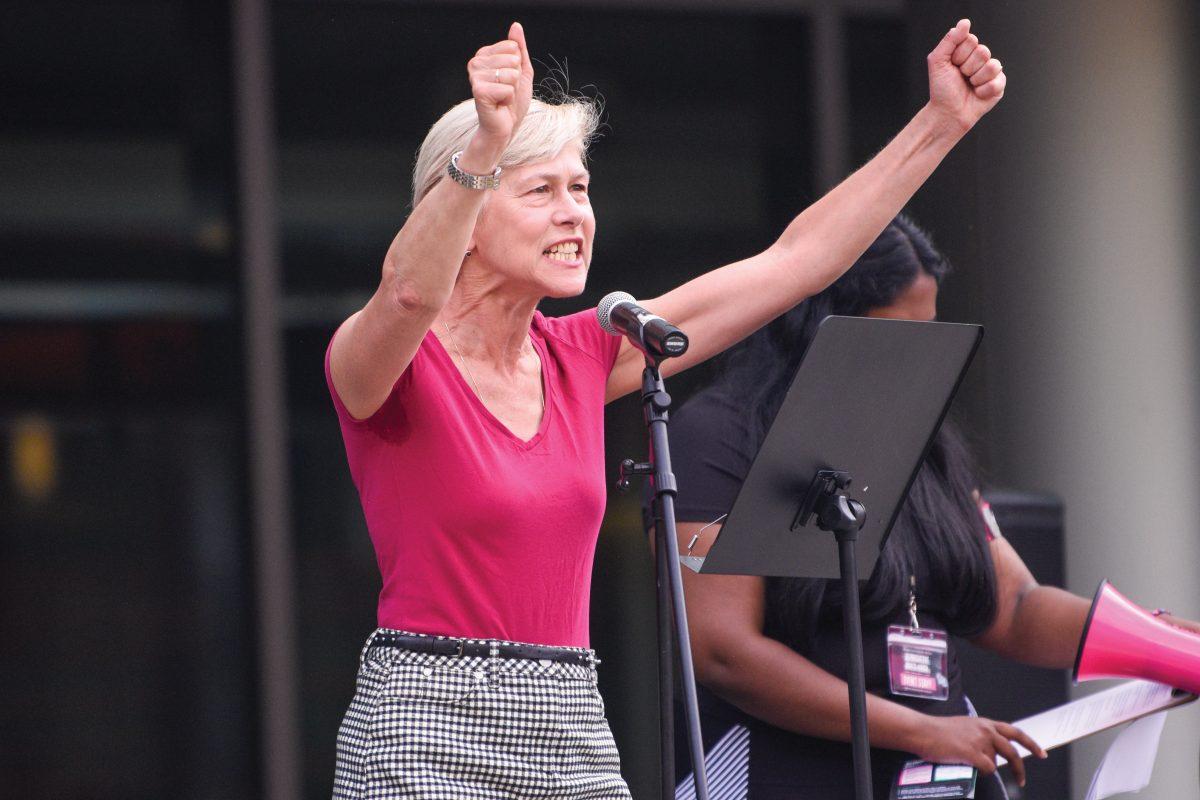

Paul Fyfe, associate professor in the department of English, gave a talk on the impacts of artificial intelligence on teaching and research in Caldwell Lounge on Oct. 24.

Fyfe has worked on a variety of technological topics and their relevance to English and teaching. In his talk, he said it has become nearly impossible to distinguish between human-written texts and AI-generated texts.

“ChatGPT and similar AI models don’t learn a language as we do, but they learn the statistical relations among words,” Fyfe said. “It’s a probabilistic guess, a prediction of words. … The key thing is that such AI uses previous context to make predictions. So instead of spitting out grammatically correct nonsense, it generates semantic coherence.”

Fyfe teaches a class that explores the implications of language-based AI models, such as ChatGPT, for research and teaching. In his talk, he said he encouraged his students to use ChatGPT for some of the writing in the course to demonstrate its unreliability.

“In one of my classes, I asked my students to cheat on their final essay,” Fyfe said. “Students found it harder to write a paper with AI than just writing it themselves.”

Fyfe said his students had to be very specific about what they wanted ChatGPT to write and had to experiment with their prompts.

“Some students said that they would compare collaborating with ChatGPT to being teamed up with the class slacker,” Fyfe said.

Fyfe said his students recognized false information in ChatGPT’s responses, as well as the problem of algorithmic bias.

“Programs like these do contain unjust prejudice from the data that they were trained on,” Fyfe said.

Fyfe said he thinks AI literacy is an important skill for college students.

“Students are not getting this kind of AI literacy in the curriculum yet,” Fyfe said. “I don’t know where else in the University students learn about AI literacy, and it is vital for students to develop this skill set.”

Thomas Hardiman, director of the Office of Student Conduct, said it is up to instructors to decide whether their students are allowed to use AI tools in the classroom, and that using them does not violate the Code of Student Conduct if the instructor has authorized it.

Fyfe asked participants to compare “bot or not” writing excerpts. The audience could not tell the human-written texts apart from the AI-generated ones, which Fyfe said demonstrated the sophistication of ChatGPT.

Fyfe said this experiment was a version of the “Turing test,” which examines to what extent artificial intelligence can pass for a human.

“The question is no longer whether or not AI can pass the Turing test,” Fyfe said. “The question is what do we do now that they can.”

After the talk, several participants shared their perspectives and the challenges AI poses for them. One of them was Amber Holland, lecturer in the communication studies department at NC State. Rather than being concerned about AI’s use in the classroom, Holland said she was excited to learn more about it.

“I would love to have my students use this in the classroom, but also to use it in an ethical way,” Holland said. “Today, I learned that there is a lot more to learn and that I want to be proactive about initiating conversations in my classroom about it.”